“The significance of ChatGPT is no less than the birth of the PC or the Internet,” Bill Gates said, praising this new trend.

In a Silicon Valley left bleak by waves of layoffs, ChatGPT has once again lit a light of hope.

Microsoft has invested $10 billion in OpenAI and plans to integrate ChatGPT into its full range of products. On February 4, a version of Bing integrated with “ChatGPT-4” briefly went live, and its speed was astounding.

In response to Microsoft’s aggressive moves, Google urgently called back its two founders, Larry Page and Sergey Brin, and last week began internal testing of a similar product, Apprentice Bard. Google also invested nearly $400 million in OpenAI’s competitor Anthropic, forging a tie-up similar to the one between Microsoft and OpenAI.

Chinese technology companies are following suit, and a wave of excitement like the one AlphaGo set off in 2016 is sweeping the industry again.

On February 7, Baidu announced the name of its own ChatGPT-style product, “Wen Xin Yi Yan” (ERNIE Bot), and said it expects to launch it in March. On February 8, Alibaba revealed that its own ChatGPT-style chatbot is in internal testing. The same day, Netease Youdao CEO Zhou Feng confirmed exclusively to Guangcone Intelligence that Youdao may launch products built on ChatGPT-like technology in the future, with application scenarios centered on online education. On February 9, Tencent said it is conducting dedicated research in ChatGPT- and AIGC-related fields in an orderly manner.

For a time, not only was everyone in the tech world talking about ChatGPT, but many ordinary users also began using it to make money. UBS estimates that ChatGPT reached 100 million monthly active users in January this year, only two months after launch. Before it, the fastest was TikTok, which took about nine months, making ChatGPT the fastest-growing consumer app to date.

ChatGPT is great, but don’t deify it.

Only AI left in Silicon Valley

When it comes to ChatGPT, we have to start with Silicon Valley. Like most earlier technological breakthroughs, revolutionary or not, such as the Internet, Web3, and the metaverse, ChatGPT comes from Silicon Valley. Unlike in the past, however, when competing technical directions vied for attention there, ChatGPT now looks more like the only option Silicon Valley has left.

In 2022, Silicon Valley went through a wave of layoffs that hit almost every technology company. By February 2023, although winter was easing, the chill in Silicon Valley had not yet faded. According to trueup.io, in the first month of 2023, 326 technology companies worldwide laid off a total of 106,950 people, most of them in Silicon Valley. The cuts are very widespread, and the metaverse, chips, autonomous driving, and SaaS are the hardest-hit areas.

Layoffs have always been a direct manifestation of an industry downturn.

Take Web3 as an example. Coinbase, the first publicly listed, regulated cryptocurrency exchange in the United States, announced in January 2023 that it would lay off 20% of its employees, after already cutting 18% of its staff last June. According to the research firm PitchBook, venture capital investment in Web3 in the fourth quarter of 2022 fell to its lowest level in two years, down 75% from the same period in 2021.

In chips, giants such as Micron, GlobalFoundries, and Intel were not spared. Lam Research laid off 1,300 people, and Intel cut pay for managers up to and including the CEO and laid off hundreds of employees. In SaaS, Salesforce announced on January 4 that it would lay off 8,000 people, about 10% of its workforce. In autonomous driving, layoffs have been reported at Waymo, Cruise, TuSimple, and the driverless delivery company Nuro.

In addition, the metaverse, once held up as the hope for the next generation of the Internet, has finally reached an inflection point. Last November, Meta confirmed its first large-scale layoffs in its 18-year history. Zuckerberg apologized to the laid-off employees: “I was wrong. I am responsible for this layoff and how we got to where we are today.” Investors are no longer optimistic about the metaverse’s future. The American investment firm Altimeter Capital published an open letter to Meta calling on the company to cut staff costs by 20% and to cap spending on metaverse projects at $5 billion per year.

Compared with Meta, which plunged headlong into the metaverse and is now struggling to turn around, Microsoft, which had waded in less deeply, decisively chose to drop the old and embrace the new.

First, Microsoft made drastic cuts to its metaverse businesses. It announced that it will shut down AltspaceVR, the social platform it acquired in 2017, on March 10, and it may disband the Mixed Reality Toolkit (MRTK) team. At the same time, Microsoft is investing heavily in AI. In early January, it emerged that Microsoft planned to invest $10 billion in OpenAI, and it then announced that it would integrate ChatGPT across its full range of products, including Bing search, Office, and Azure. On February 7, Microsoft held an event in Redmond unveiling the ChatGPT-integrated Bing.

In fact, from the day ChatGPT launched, the idea that it could upend traditional search spread like wildfire. So, facing Microsoft’s aggressive moves, Google announced on February 6 that it would launch a chatbot, Bard, to compete with ChatGPT, and Google’s cloud computing division is also developing a project called “Atlas”.

On February 7, Google invested about $300 million in ChatGPT competitor Anthropic for a 10% stake, giving Google and Anthropic a tie-up similar to the one between Microsoft and OpenAI.

Besides Google and Microsoft, Meta released a similar chatbot three months before ChatGPT went live, but it made little impact. In the words of Meta’s chief AI scientist, Yann LeCun, “Meta’s BlenderBot is boring.”

Amazon, meanwhile, has applied ChatGPT to tasks including answering interview questions, writing software code, and creating training documents. An Amazon employee said on Slack that the company’s cloud unit has formed a small working group to better understand the impact of AI on its business. Even Apple has announced that it will hold an internal AI summit next week.

The turning point, though, came last September, when Sequoia Capital published the article “Generative AI: A Creative New World”, which argued that generative AI has the potential to create trillions of dollars of economic value.

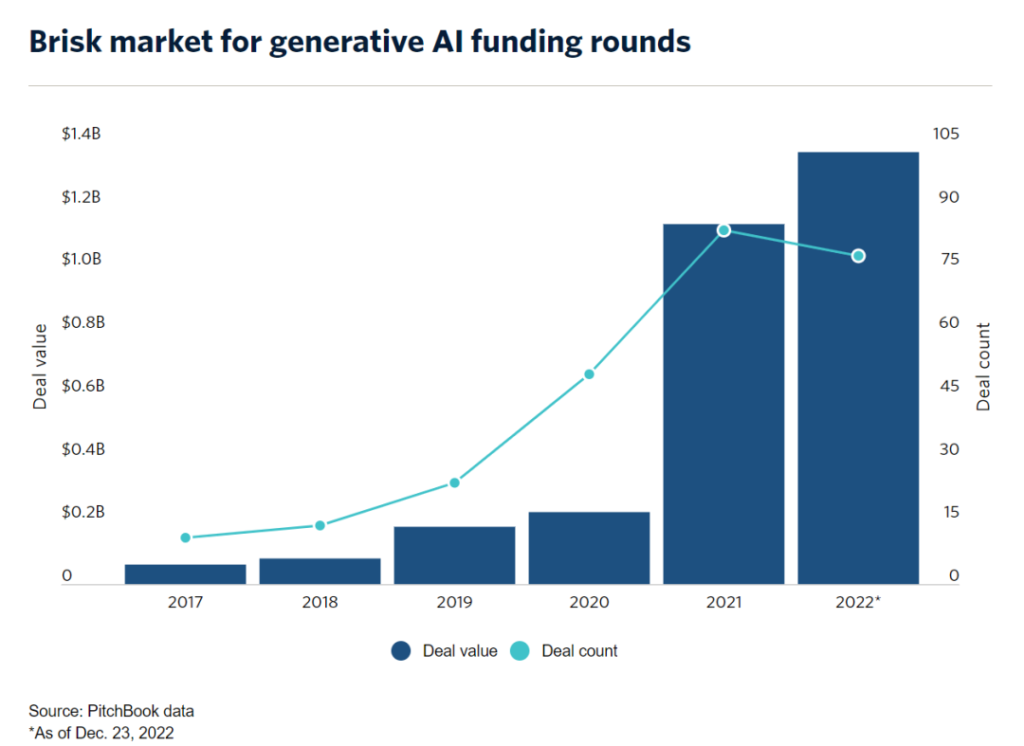

According to PitchBook, investors put a total of US$1.37 billion (about RMB 9.369 billion) into generative AI companies in 2022, nearly as much as in the previous five years combined. The money went not only to leading companies such as OpenAI and Stability AI but also to start-ups such as Jasper, Regie.ai, and Replika.

Overall, from investors to large companies to entrepreneurs, the curtain has risen on AI 2.0.

On the other hand, as far as ChatGPT is concerned, its technical principles are not new.

Yann LeCun put it this way: “As far as the underlying technology is concerned, ChatGPT is not particularly innovative. It is not revolutionary, although this is how the public perceives it.” He added that other companies have very similar technology. ChatGPT is a collective effort in the sense that it brings together several technologies developed by multiple parties over the years.

Li Di also pointed out that the technical concept behind large models has existed for several years. Many large models, in China and abroad, have already been trained on this concept; they differ only in what they focus on during fine-tuning.

To use an imperfect analogy, ChatGPT is like an atomic bomb: its principle is already in the textbooks, but most countries still cannot build one, mainly because of engineering problems. Where do hundreds of billions of data points come from? How should the data be labeled, which data should be labeled, and how much? How should it all be organized and used for training? And so on.

None of these answers appear in OpenAI’s papers. Companies have to experiment in their own model training, gain experience, and accumulate their own know-how.

Don’t deify ChatGPT

In terms of underlying technology, the gap between China and other countries is not as large as imagined. Still, seeing foreign technology in full swing, people worry that China will fall behind.

For a product whose technical logic is as clear as ChatGPT’s, arriving later does not change the outcome. Whether the product turns out good or poor, though, comes down to the ability to solve specific engineering problems. These have to be worked out through exploration and trial and error, and the experience takes a long time to build. Behind this is a question of technical capability.

Compared with the English-dominated language environment of Europe and the United States, Chinese is an ideographic language, which is inherently weaker than English at abstract generalization and logical expression. In addition, the Chinese-language Internet has a relatively scarce corpus and has accumulated far less data than the English-language Internet.

Therefore, some people in the industry said that for the current development of domestic ChatGPT-like products, the most important thing is not the model, but the data.

“Natural language processing requires a very rigorous reasoning process,” Li Di said. “In a sense, the large model represents a kind of brute force: compressing a large amount of data into a black box and then extracting from it. This means that, as long as computing power is guaranteed, everyone has the chance to use methods that have never been used before.”

On the other hand, although ChatGPT is indeed revolutionary, that does not make it the only future direction for NLP.

First, consider ChatGPT’s own technical development. The most important question today is how to extract good results from a model once it has been built. Many new methods have yet to be tried, so it is possible that a smaller model could one day achieve very good results.

Li Di said the entire industry is pursuing this possibility, because an excessively large parameter count inevitably brings very high costs and various other problems. “Today’s technical differences are far from forming distinct technical schools, and far from dividing along application scenarios.”

Second, across the NLP field as a whole, there are currently two mainstream technical routes: the bidirectional pre-trained language model + fine-tuning, represented by Google’s BERT, and the autoregressive pre-trained language model + prompting (instructions/hints), represented by OpenAI’s GPT.
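The difference between the two routes starts with how each model reads text during pre-training. The sketch below is a simplified, hypothetical illustration in plain Python, not code from Google or OpenAI: a BERT-style masked-language-model example hides some tokens and lets the model use context on both sides, while a GPT-style autoregressive example only ever predicts the next token from the tokens to its left. The function names and the `[MASK]` placeholder string are assumptions for illustration. Real BERT pre-training masks about 15% of tokens and sometimes substitutes a random or unchanged token instead of `[MASK]`, and the fine-tuning and prompting stages are not shown at all.

```python
from typing import Dict, List, Set, Tuple


def attention_mask(seq_len: int, causal: bool) -> List[List[bool]]:
    """mask[i][j] is True when position i is allowed to look at position j."""
    # Bidirectional (BERT-style): every position sees the whole sequence.
    # Causal (GPT-style): position i sees only itself and earlier positions.
    return [[(j <= i) if causal else True for j in range(seq_len)]
            for i in range(seq_len)]


def masked_lm_example(tokens: List[str], positions: Set[int],
                      mask_token: str = "[MASK]") -> Tuple[List[str], Dict[int, str]]:
    """BERT-style objective (simplified): hide tokens, predict them from both sides."""
    corrupted = [mask_token if i in positions else tok for i, tok in enumerate(tokens)]
    targets = {i: tokens[i] for i in sorted(positions)}
    return corrupted, targets


def next_token_examples(tokens: List[str]) -> List[Tuple[List[str], str]]:
    """GPT-style objective: every prefix is used to predict the token after it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


if __name__ == "__main__":
    sentence = ["large", "models", "need", "data"]
    print(attention_mask(3, causal=True))
    print(masked_lm_example(sentence, {1}))
    print(next_token_examples(sentence))
```

Running it prints a lower-triangular causal mask and the two kinds of training pairs. The causal view is what lets a GPT-style model generate text one token at a time, so a single model can be steered with prompts; the bidirectional view suits understanding a given input, which is one reason BERT is usually adapted to a task by fine-tuning rather than used to generate text.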

Before ChatGPT was released, BERT had always been the industry’s mainstream technical approach. It lost the spotlight to ChatGPT because it cannot, like GPT, solve every problem with a single model, and it has not shown the potential for artificial general intelligence.

In fact, though, BERT has advantages in many specific scenarios. In a well-defined scenario, BERT can match ChatGPT’s performance with far less data and at lower training cost (ChatGPT is a 175-billion-parameter model pre-trained on a corpus of about 300 billion tokens).

Take a specific scenario inside a hospital. On the one hand, the hospital cannot afford a model on the scale of 100 billion parameters: in that setting ChatGPT is a sledgehammer, and the cost of deploying it is out of reach. On the other hand, ChatGPT was trained on public data, while hospital data is not on the public Internet, so ChatGPT may be of little help with hospital-specific problems.

BERT, by contrast, can adapt to such a scenario. With less data and at lower cost, it can be trained into a model tailored to hospital data and scenarios, which handles specific problems better than ChatGPT.
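A back-of-the-envelope calculation shows why model size matters so much for a hospital deployment. The sketch below counts only the memory needed to hold the weights, under assumptions that are illustrative rather than taken from the source: 2 bytes per parameter (16-bit floats) for the 175-billion-parameter model cited above, about 110 million parameters for BERT-base stored as 32-bit floats, and a hypothetical accelerator with 80 GB of memory. Activations, serving overhead, and training-time gradients and optimizer state would all add to these figures, so treat them as lower bounds, not deployment specs.

```python
import math


def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory (in GB, 1 GB = 1e9 bytes) needed just to store the model weights."""
    return num_params * bytes_per_param / 1e9


def devices_needed(memory_gb: float, device_memory_gb: float) -> int:
    """Minimum number of accelerators whose combined memory can hold the weights."""
    return math.ceil(memory_gb / device_memory_gb)


# Illustrative assumptions only (see the lead-in), not vendor or OpenAI figures.
LARGE_MODEL_PARAMS = 175e9   # parameter count cited in the text
BERT_BASE_PARAMS = 110e6     # approximate size of BERT-base
DEVICE_MEMORY_GB = 80.0      # hypothetical accelerator memory

large_gb = weight_memory_gb(LARGE_MODEL_PARAMS, bytes_per_param=2)  # 16-bit weights
bert_gb = weight_memory_gb(BERT_BASE_PARAMS, bytes_per_param=4)     # 32-bit weights

if __name__ == "__main__":
    print(f"175B model: {large_gb:.0f} GB -> at least "
          f"{devices_needed(large_gb, DEVICE_MEMORY_GB)} accelerators")
    print(f"BERT-base:  {bert_gb:.2f} GB -> "
          f"{devices_needed(bert_gb, DEVICE_MEMORY_GB)} accelerator")
```

Under these assumptions the large model needs roughly 350 GB for its weights alone, spread across at least five such devices, while BERT-base needs well under 1 GB and fits on one. That gap of more than two orders of magnitude is the cost difference the hospital example turns on.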

This is essentially the difference between an all-rounder who dabbles in every field and an expert who goes deep in one. In a specific scenario defined by its data, BERT has the advantage; ChatGPT is better suited to open-ended applications without clearly defined data or goals.

“Just like the ‘large-scale alchemy’ someone joked about at the NeurIPS conference a few years ago, today’s large models are like a good toy everyone has been handed, but I don’t know what amazing things this toy can produce.”

In 2016, when AlphaGo defeated Lee Sedol, we also thought the era of AI had arrived and that it would replace humans in many fields. We were both panicked and excited, but in fact, some seven years have passed, and AlphaGo has not changed the world, nor even anyone’s life.
